A 6-degree-of-freedom (6-DoF) pose localization method for a monocular camera in a 3D point-cloud dense map prebuilt by an RGB-D sensor.

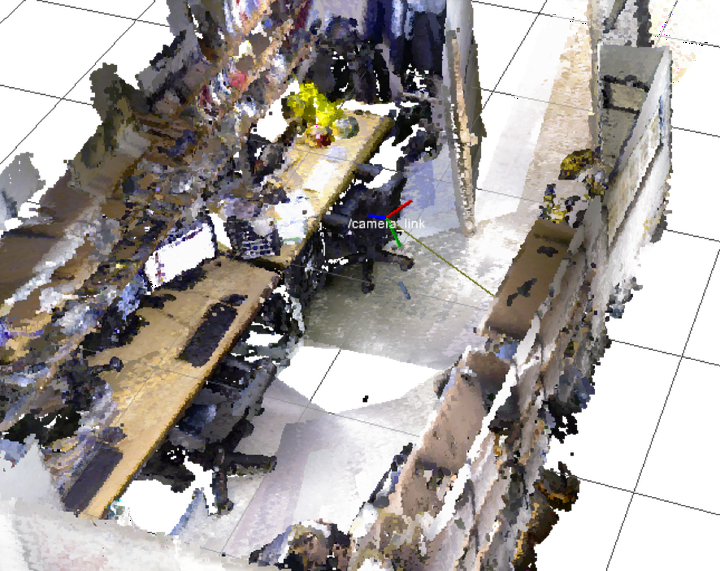

3D Map built a-priori with the RGB-D camera

3D Map built a-priori with the RGB-D camera

Abstract

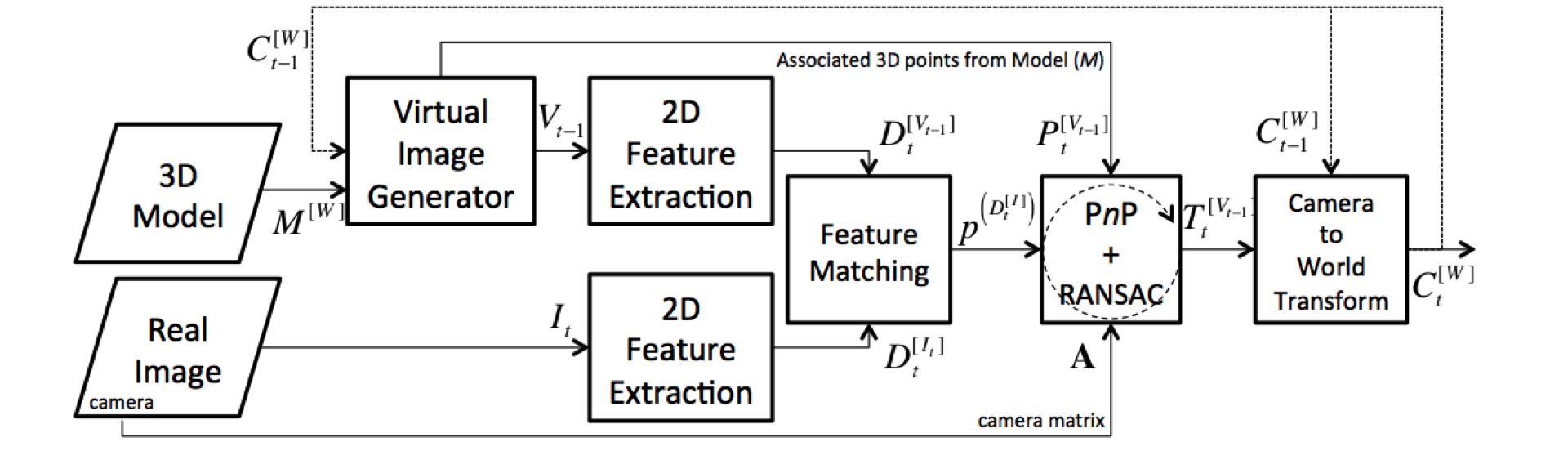

We present a 6-degree-of-freedom (6-DoF) pose localization method for a monocular camera in a 3D point-cloud dense map prebuilt by depth sensors (e.g., RGB-D sensor, laser scanner, etc.). We employ fast and robust 2D feature detection on the real camera to be matched against features from a virtual view. The virtual view (color and depth images) is constructed by projecting the map’s 3D points onto a plane using the previous localized pose of the real camera. 2D-to-3D point correspondences are obtained from the inherent relationship between the real camera’s 2D features and their matches on the virtual depth image (projected 3D points). Thus, we can solve the Perspective-n-Point (PnP) problem in order to find the relative pose between the real and virtual cameras. With the help of RANSAC, the projection error is minimized even further. Finally, the real camera’s pose is solved with respect to the map by a simple frame transformation. This procedure repeats for each time step (except for the initial case). Our results indicate that a monocular camera alone can be localized within the map in real-time (at QVGA-resolution). Our method differentiates from others in that no chain of poses is needed or kept. Our localization is not susceptible to drift because the history of motion (odometry) is mostly independent over each PnP + RANSAC solution, which throws away past errors. In fact, the previous known pose only acts as a region of interest to associate 2D features on the real image with 3D points in the map. The applications of our proposed method are various, and perhaps it is a solution that has not been attempted before.

6-DoF Pose Localization Algorithm

- The virtual view is constructed by projecting the map’s 3D points to a plane using the $t-1$ pose.

- 2D features are matched between the real and virtual images.

- 2D-to-3D point correspondences are obtained between the real camera’s 2D features and associated 3D points in the map.

- After Perspective-n-Point (PnP) + RANSAC, the relative 6-DoF transformation between the real and virtual cameras is found.

- A final frame transformation localizes the 6-DoF pose of the camera with respect to the map.