Fusing Optical Flow and Stereo in a Spherical Depth Panorama Using a Single-Camera Folded Catadioptric Rig

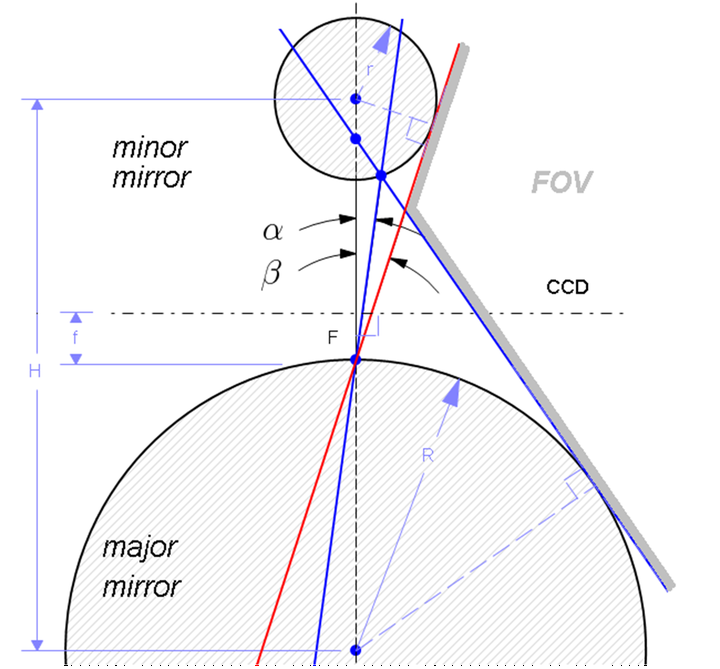

Spherical omnistereo geometric model

Abstract

We design a novel ‘folded’ spherical catadioptric rig, formed by two coaxially aligned spherical mirrors of distinct radii and a single perspective camera, to recover near-spherical range panoramas (about $360^\circ \times 153^\circ$) by fusing depth from optical flow and stereoscopy. We observe that, for rigid motion parallel to a plane, optical flow and stereo yield nearly complementary distributions of depth resolution: optical flow provides strong depth cues in the periphery and near the poles of the view-sphere, whereas stereo yields reliable depth in a narrow band about the equator. We exploit this dual-modality principle by modeling the depth resolution of optical flow and of stereo separately and then fusing them on a probabilistic spherical panorama. We achieve a desired vertical field of view and optical resolution by deriving a linearized model of the rig in terms of three parameters: the radii of the two mirrors and the axial distance between the mirrors’ centers. We analyze the error due to violating the single-viewpoint constraint and formulate additional design constraints that minimize it. We evaluate the proposed method on a synthetic model and on real-world prototypes by computing dense spherical depth panoramas of cluttered indoor environments after fusing the two modalities.
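
To make the complementarity argument concrete, the following is a minimal sketch and not the fusion model of this paper. It assumes each modality gives a Gaussian depth estimate per viewing direction and combines the two by inverse-variance weighting. The variance models `stereo_variance` and `flow_variance`, the constant `EPS`, and the choice of a vertical stereo baseline with horizontal translation along the x axis are all hypothetical, chosen only to reproduce the qualitative behavior described above.

```python
import math

EPS = 1e-6  # assumed floor that avoids division by zero where a modality has no depth cue


def stereo_variance(elevation, sigma0=1.0):
    # Toy model (assumption): the stereo baseline is vertical, so disparity
    # sensitivity scales with cos(elevation) and vanishes at the poles.
    return sigma0 / max(math.cos(elevation) ** 2, EPS)


def flow_variance(elevation, azimuth, sigma0=1.0):
    # Toy model (assumption): the camera translates along +x in the horizontal
    # plane; flow magnitude scales with the sine of the angle between the
    # viewing ray and the motion direction.
    ray_x = math.cos(elevation) * math.cos(azimuth)
    sin_sq = 1.0 - ray_x ** 2
    return sigma0 / max(sin_sq, EPS)


def fuse(depth_a, var_a, depth_b, var_b):
    # Inverse-variance weighting: the product of two Gaussian estimates.
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_depth = fused_var * (w_a * depth_a + w_b * depth_b)
    return fused_depth, fused_var


# Near the pole the fused depth follows optical flow; on the equator,
# looking along the motion direction, it follows stereo.
pole = math.pi / 2
depth_pole, _ = fuse(2.0, flow_variance(pole, 0.0), 4.0, stereo_variance(pole))
depth_equator, _ = fuse(2.0, flow_variance(0.0, 0.0), 4.0, stereo_variance(0.0))
```

Under these assumptions the fused variance is never larger than that of either input, so each modality covers the directions where the other is uninformative.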